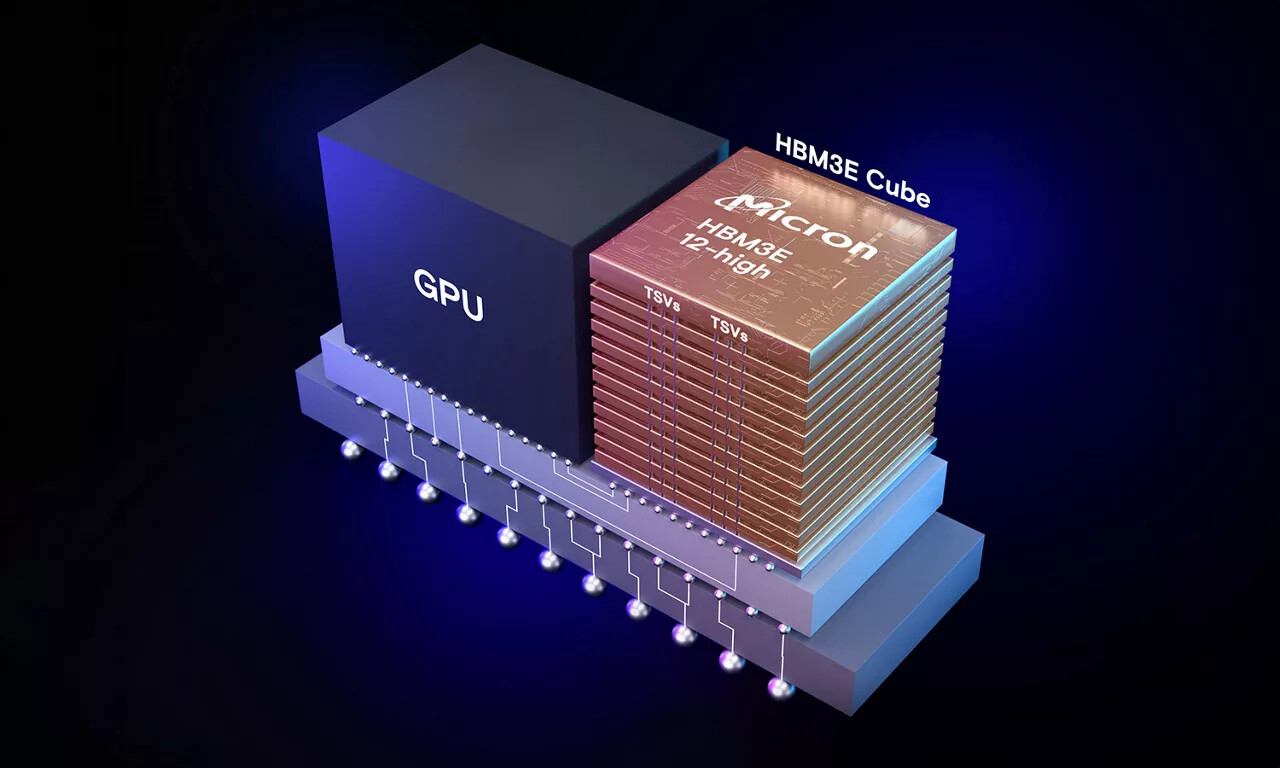

AI workloads continue to evolve, and memory bandwidth and capacity are increasingly critical to system performance. The industry's latest GPUs need high-bandwidth memory (HBM) that delivers high performance, large capacity, and better power efficiency. Micron is meeting these requirements and is now shipping production-ready HBM3E 12-high units to key industry partners for qualification across the AI ecosystem.

Micron's HBM3E 12-high 36 GB consumes less power than competitors' 8-high 24 GB options while packing 50% more DRAM capacity into the package. The added capacity allows larger AI models to run on a single processor, leading to faster insights and improved system performance. Micron's HBM3E 12-high 36 GB also delivers more than 1.2 terabytes per second (TB/s) of memory bandwidth at a pin speed greater than 9.2 gigabits per second (Gb/s), combining high throughput with low power consumption for power-hungry data centers.
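As a rough sanity check on the figures above, the capacity and bandwidth numbers can be reproduced with simple arithmetic. The sketch below assumes a 1024-bit interface per HBM stack, which is the width defined for HBM3-class memory in the JEDEC standard and is not stated in this article; the function names are hypothetical and used only for illustration.

```python
# Back-of-the-envelope check of the quoted HBM3E figures.
# ASSUMPTION: 1024 data pins per stack (HBM3-class interface width);
# this value is not given in the article itself.
HBM_INTERFACE_BITS = 1024


def capacity_gain_percent(new_gb: float, old_gb: float) -> float:
    """Percentage capacity increase of new_gb over old_gb."""
    return (new_gb - old_gb) / old_gb * 100.0


def stack_bandwidth_tbps(pin_speed_gbps: float,
                         interface_bits: int = HBM_INTERFACE_BITS) -> float:
    """Per-stack bandwidth in TB/s (decimal) from per-pin speed in Gb/s."""
    gigabytes_per_second = pin_speed_gbps * interface_bits / 8  # bits -> bytes
    return gigabytes_per_second / 1000.0  # GB/s -> TB/s


def pin_speed_for_bandwidth(target_tbps: float,
                            interface_bits: int = HBM_INTERFACE_BITS) -> float:
    """Per-pin speed in Gb/s needed to reach target_tbps per stack."""
    return target_tbps * 1000.0 * 8 / interface_bits


if __name__ == "__main__":
    print(f"Capacity gain, 36 GB vs 24 GB: {capacity_gain_percent(36, 24):.0f}%")
    print(f"Bandwidth at 9.2 Gb/s per pin: {stack_bandwidth_tbps(9.2):.3f} TB/s")
    print(f"Pin speed for 1.2 TB/s: {pin_speed_for_bandwidth(1.2):.3f} Gb/s")
```

Under that assumption, exactly 9.2 Gb/s per pin works out to roughly 1.18 TB/s, and 1.2 TB/s corresponds to about 9.4 Gb/s per pin, which is consistent with the "greater than 9.2 Gb/s" wording.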

In addition, Micron's HBM3E 12-high units feature fully programmable memory built-in self-test (MBIST) capabilities, which improve test coverage and expedite validation, enhancing system reliability and shortening time to market. Shipping these units for partner qualification reflects the company's focus on the data-intensive demands of evolving AI infrastructure.

Micron is also a partner in TSMC's 3DFabric Alliance, which brings together memory suppliers, customers, and outsourced semiconductor assembly and test (OSAT) players to integrate HBM3E into AI systems. This partnership highlights Micron's dedication to driving innovation in the semiconductor industry.

Looking ahead, Micron is already planning its HBM4 and HBM4E roadmap, with the aim of staying at the forefront of memory and storage development for the data center. For more information, visit Micron's HBM3E page.