MangoBoost Sets New Industry Benchmark with MLPerf Inference v5.0 Submission

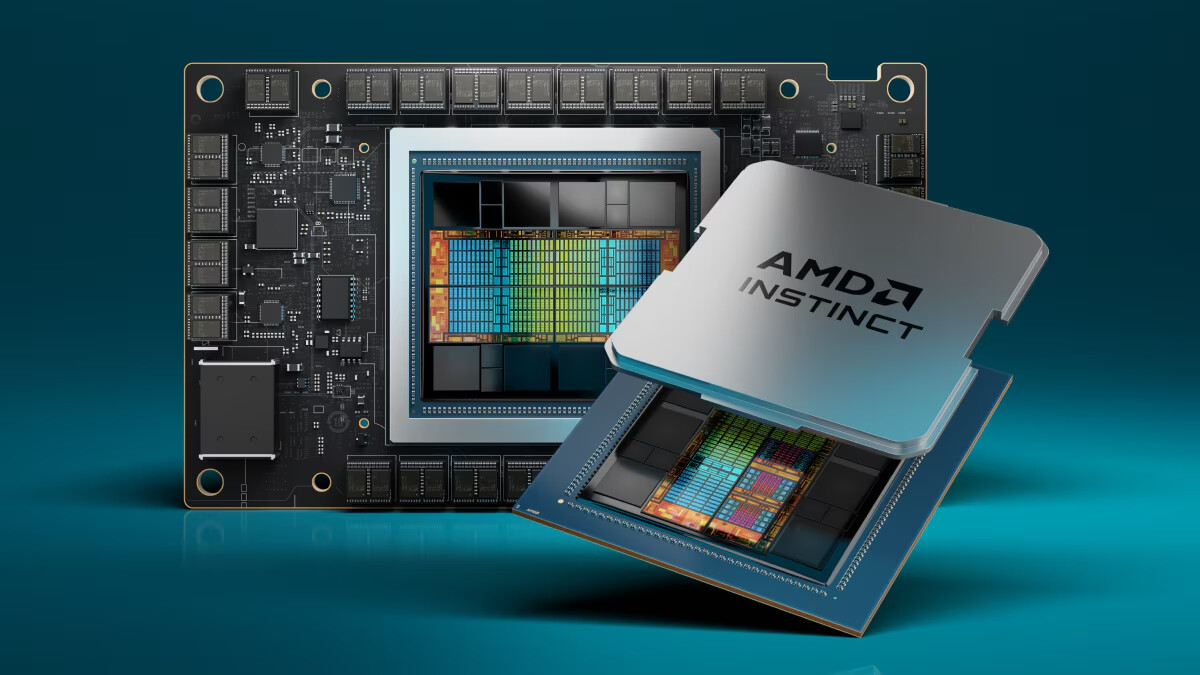

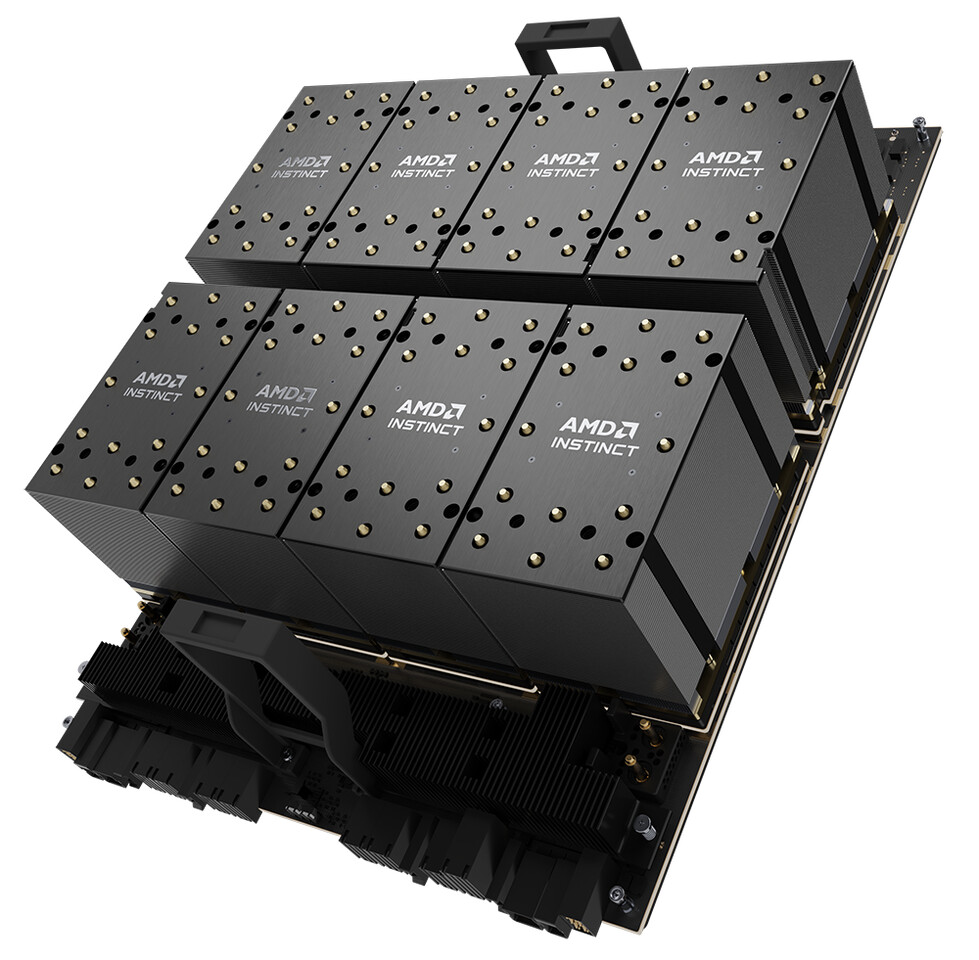

MangoBoost, a provider of system solutions for improving AI data center efficiency, has reached a significant milestone with its MLPerf Inference v5.0 submission. Running on AMD Instinct MI300X GPUs, the company's Mango LLMBoost AI Enterprise MLOps software set a new record for Llama2-70B in the offline inference category. The submission is the first multi-node MLPerf inference result on AMD Instinct MI300X GPUs, and it surpasses previous results from competitors using NVIDIA H100 GPUs.

Unmatched Performance and Cost Efficiency

MangoBoost's MLPerf submission shows a 24% performance advantage over the best published result from Juniper Networks, which used 32 NVIDIA H100 GPUs. Mango LLMBoost achieved 103,182 tokens per second (TPS) in the offline scenario and 93,039 TPS in the server scenario on AMD MI300X GPUs, outperforming the previous best result on NVIDIA H100 GPUs. Because AMD MI300X GPUs are priced lower than NVIDIA H100 GPUs, the combination of Mango LLMBoost and MI300X also delivers up to 62% cost savings while maintaining that inference throughput.

Mango LLMBoost: A Scalable and Hardware-Flexible MLOps Solution

Mango LLMBoost is enterprise-grade AI inference software that offers scalability and cross-platform compatibility. It supports over 50 open models, including Llama, Qwen, and DeepSeek, and can be deployed via Docker and accessed through OpenAI-compatible APIs. The software is cloud-ready, available on major cloud platforms, and can also be deployed on-premises for enterprises that require full control and security.
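As a rough illustration of what an OpenAI-compatible API means for client code, the sketch below builds a chat-completions request using only Python's standard library. The base URL, port, and model name are placeholders assumed for illustration; they are not taken from MangoBoost documentation, and an actual deployment may expose different values.

```python
import json
import urllib.request

# Hypothetical values: the host, port, and model name are placeholders,
# not taken from MangoBoost documentation.
BASE_URL = "http://localhost:8000/v1"
MODEL = "llama-2-70b-chat"


def build_chat_request(prompt, base_url=BASE_URL, model=MODEL, max_tokens=128):
    """Build a POST request in the OpenAI chat-completions format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(request, timeout=30):
    """Send the request and return the text of the first completion choice."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `send_chat_request(build_chat_request("Hello"))` would require a server listening at the assumed address. Because the request follows the OpenAI wire format, existing OpenAI client code should generally only need its base URL changed to target such a server.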

Key capabilities of Mango LLMBoost include:

- Auto Parallelization: Efficient distribution of large models across GPUs and nodes.
- Auto Config Tuning: Optimization of runtime parameters based on workload characteristics.
- Auto Context Scaling: Dynamic adjustment of memory usage to maximize GPU utilization.
- Auto Disaggregated Deployment: Flexible deployment across multiple inference stages.

Collaborating closely with AMD, MangoBoost has unlocked the full potential of MI300X GPUs, achieving record-breaking results through the ROCm software stack. This partnership has led to a scalable and efficient AI inference solution that can be deployed across various configurations with ease.

Extending Performance Leadership to AWS and Beyond

Beyond the MLPerf results, Mango LLMBoost has been tested extensively on a range of cloud and on-premises setups. On an AWS setup with 8 NVIDIA A100 GPUs, Mango LLMBoost outperformed competitors across multiple model sizes. It also leads in GPU cost per million tokens, significantly reducing inference costs compared to other solutions.
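The GPU cost per million tokens metric can be derived from throughput and GPU pricing. The sketch below shows one common way to compute it, assuming cost is simply the hourly GPU price multiplied by the number of GPUs. The function names and the example figures (10,000 TPS, $4.00 per GPU-hour) are hypothetical and chosen only to illustrate the arithmetic; they are not MangoBoost's measurements or actual cloud prices.

```python
def cost_per_million_tokens(tokens_per_second, num_gpus, price_per_gpu_hour):
    """Return the GPU cost (in the price's currency) to generate one million tokens."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    tokens_per_hour = tokens_per_second * 3600
    cluster_cost_per_hour = num_gpus * price_per_gpu_hour
    return cluster_cost_per_hour / tokens_per_hour * 1_000_000


def relative_savings(baseline_cost, candidate_cost):
    """Return the fractional saving of candidate_cost relative to baseline_cost."""
    if baseline_cost <= 0:
        raise ValueError("baseline_cost must be positive")
    return 1 - candidate_cost / baseline_cost


if __name__ == "__main__":
    # Hypothetical inputs: 8 GPUs at $4.00 per GPU-hour sustaining 10,000 TPS.
    cost = cost_per_million_tokens(10_000, num_gpus=8, price_per_gpu_hour=4.00)
    print(f"GPU cost per million tokens: ${cost:.4f}")
```

Under this model, higher throughput or a lower hourly GPU price both reduce the cost per million tokens, which is why a throughput gain and a hardware price difference compound in a cost comparison.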

Expanding AI Infrastructure Solutions

In addition to the Mango LLMBoost software, MangoBoost offers hardware acceleration solutions based on Data Processing Units (DPUs) to enhance AI and cloud infrastructure, including Mango GPUBoost, Mango NetworkBoost, and Mango StorageBoost.